In February 2022, NIST published the results of a study that looked at the outcomes of mock mobile and hard-drive examinations by digital forensic examiners. A total of 394 individuals registered for the mobile test, and 77 participants completed the mobile case study. For the hard-drive test, 450 individuals registered and 102 participants completed the case study. Participation in the study was voluntary.

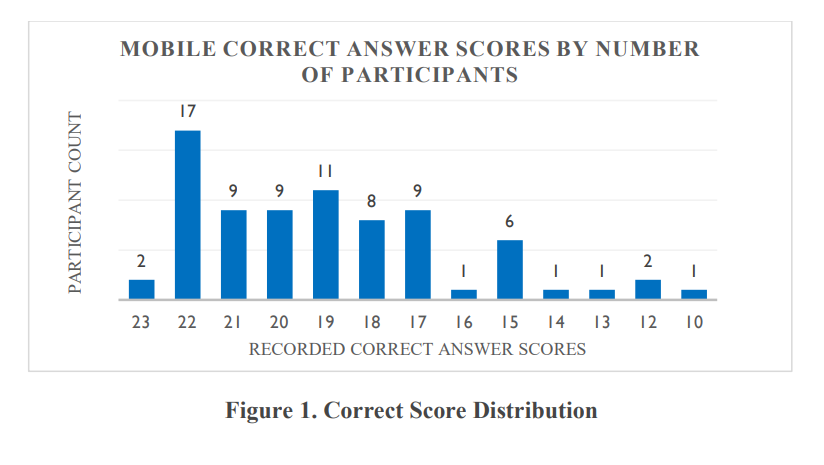

From my perspective, there was a lot of variation in the number of correct answers provided by participants. For the mobile case study, Figure 1 from the report shows the distribution of correct answers by number of participants. There were 24 questions total.

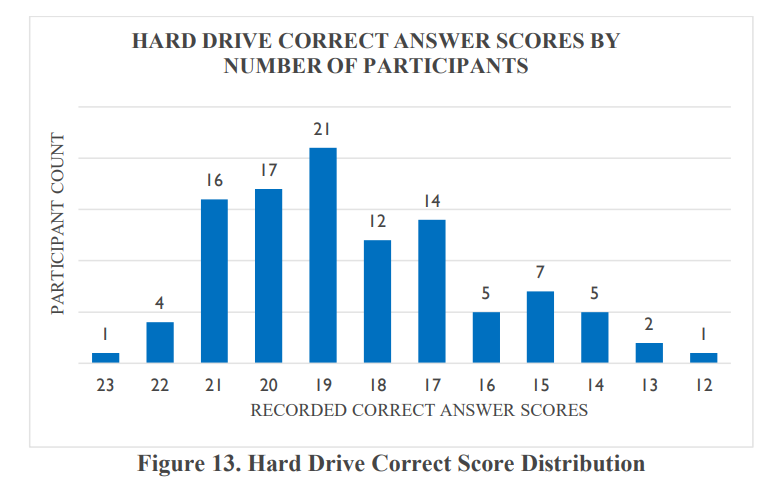

For the hard-drive case study, Figure 13 from the report shows the distribution of correct answers in the 24-question study.

The study attempted to look at the factors that would contribute to the results. Data was collected on participants’ workplace environment (including lab size, lab accreditation, and primary type of work), education, and work experience (including whether the participant works full time as a digital examiner, whether the participant has testified, how the participant was trained, whether the participant was proficiency tested, and whether the participant completed a digital forensic certification program). The study found that the only attribute that correlated with improved score performance was the completion of a certification program.

So what should defenders do with this information? I think an important takeaway is that there is a lot of variation in the qualifications of examiners and in the accuracy of the examinations that they perform. Defenders should keep in mind that the accuracy of examinations in this field is not fully known. The lack of established error rates is relevant for attorneys considering a Rule 702/Daubert challenge to the foundational validity of digital forensic examination.